Abstract

The advent of artificial intelligence (AI) presents unique challenges and opportunities that intersect with constitutional rights, particularly in a diverse nation like India. As AI technologies increasingly influence various aspects of daily life, from governance and healthcare to education and social interactions, it is imperative to critically assess their implications through the lens of the Indian Constitution. This paper examines the relationships between AI, individual rights, and constitutional mandates, focusing on privacy, freedom of speech, and equality. The Supreme Court of India's recognition of privacy as a fundamental right in Justice K.S. Puttaswamy v. Union of India highlights the need for robust data protection measures in the face of pervasive surveillance enabled by AI technologies.

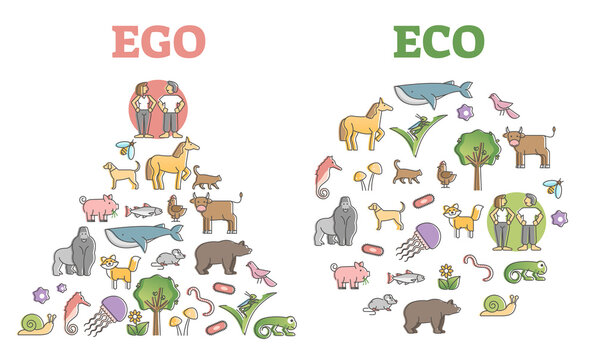

Furthermore, the role of AI in content moderation raises critical concerns about potential censorship and the suppression of dissent, which can undermine the very fabric of democratic discourse enshrined in Article 19. Moreover, the risk of algorithmic bias poses significant challenges to the principle of equality, as AI systems trained on historical data may perpetuate existing societal inequalities. Addressing these issues requires a comprehensive regulatory framework that emphasizes accountability, transparency, and public engagement.

This paper proposes a multi-faceted approach to navigate the complexities introduced by AI, advocating for a legal and ethical framework that aligns technological innovation with constitutional values and societal welfare. By fostering an environment that prioritizes individual rights while embracing technological advancements, India can ensure that the deployment of AI serves as a tool for empowerment rather than oppression. Ultimately, this exploration aims to contribute to the ongoing discourse on balancing progress with the protection of fundamental rights in the digital age.

Introduction

The rapid advancement of artificial intelligence (AI) technologies has transformed various sectors globally, presenting both opportunities and challenges. In India, a country characterized by its diversity and commitment to democratic values, the intersection of AI and constitutional rights raises pressing questions about privacy, freedom of expression, equality, and accountability. As AI continues to permeate daily life—from governance and healthcare to finance and education—it is essential to critically examine how these technologies align with the rights enshrined in the Indian Constitution. This paper explores the intricate relationship between AI and constitutional rights in India, analyzing potential conflicts and proposing a regulatory framework that safeguards individual liberties in the digital age. By addressing these challenges, we aim to ensure that technological progress enhances rather than undermines fundamental rights.

The Indian Constitution: A Framework for Rights

Fundamental Rights:

The Indian Constitution, adopted in 1949 and in force since 1950, establishes a robust framework for fundamental rights designed to protect individuals from state excesses and promote social justice. These rights, outlined in Part III of the Constitution, include:

● Article 14: Right to equality

● Article 19: Freedom of speech and expression

● Article 21: Right to life and personal liberty of an individual

● Article 32: Right to constitutional remedies

These articles are foundational to the protection of individual rights in India, and their relevance is magnified in the context of AI technologies.

Article 14: Right to Equality: Article 14 guarantees equality before the law and the equal protection of the laws, while Article 15 prohibits discrimination on grounds of religion, race, caste, sex, or place of birth. These principles are critical when considering the implications of AI, particularly in how algorithms can perpetuate existing biases and inequalities.

The Challenge of Bias in AI: AI systems are often trained on historical datasets that may contain biases reflective of societal prejudices. For instance, algorithms used in hiring processes might favor certain demographic groups while disadvantaging others. A notable example is the case of AI recruitment tools that were found to be biased against women and minority candidates, as they were trained on data that reflected a predominantly male workforce. To address these risks, it is essential to conduct regular audits and evaluations of AI systems to identify and rectify biases. Furthermore, including diverse teams in the development process can help identify potential blind spots and ensure that AI technologies promote equality rather than exacerbate existing disparities.

Article 19: Freedom of Speech and Expression: Article 19(1)(a) protects the right to freedom of speech and expression, which is fundamental to any democratic society, subject to the reasonable restrictions permitted under Article 19(2). However, the role of AI in moderating online content presents challenges to this right, particularly concerning censorship and the potential suppression of dissent.

The Role of AI in Content Moderation: Social media platforms increasingly employ AI algorithms to identify and remove harmful content, such as hate speech and misinformation. While these measures can enhance user safety, they also risk over-removal, where legitimate discourse is stifled along with harmful content. This is particularly concerning in a democratic context, where the free exchange of ideas is essential for societal progress. In India, for instance, there have been reports of content related to political dissent being disproportionately targeted for removal.

The opacity surrounding algorithmic decision-making processes can further complicate this issue, as users may find their voices silenced without recourse to challenge such actions. To navigate this challenge, it is essential to establish clear guidelines that define acceptable content while providing transparent avenues for users to appeal against wrongful takedowns. Ensuring that these policies are publicly accessible can help maintain the delicate balance between protecting individuals from harmful content and safeguarding their freedom of expression.

Article 21: Right to Life and Personal Liberty: The right to privacy has gained increasing recognition, particularly following the landmark Supreme Court ruling in Justice K.S. Puttaswamy v. Union of India (2017), which declared privacy a fundamental right under Article 21. This ruling carries significant implications for AI technologies that involve data collection, surveillance, and profiling.

The Challenge of Surveillance: AI technologies, such as facial recognition and data analytics, pose substantial threats to individual privacy. The implementation of these technologies for surveillance purposes, particularly by state actors, raises ethical questions about consent and personal freedoms. For example, cities employing facial recognition technology for security purposes must grapple with the implications of mass surveillance, as individuals may be monitored without their knowledge or consent. To safeguard privacy rights, a comprehensive legal framework is necessary to govern the use of surveillance technologies. Such a framework should prioritize informed consent, transparency regarding data collection and processing, and mechanisms to challenge invasive surveillance practices.

Article 32: Right to Constitutional Remedies: Article 32 empowers individuals to seek remedies when their fundamental rights are violated. This provision is particularly relevant in the context of AI, as automated decision-making processes can lead to unjust outcomes that affect individuals’ rights.

Legal Recourse in the Age of AI: As AI systems become increasingly integrated into critical decision-making processes—ranging from credit scoring to law enforcement—there is an urgent need for mechanisms that allow individuals to contest decisions made by AI. Establishing clear grievance redressal processes is vital to ensuring accountability and protecting individuals from arbitrary actions resulting from automated systems. For instance, if an AI algorithm denies an individual a loan based on biased data, there must be a transparent process in place to allow the individual to contest that decision. Legal frameworks must evolve to incorporate provisions that address the unique challenges posed by AI technologies, ensuring individuals can seek redress and justice.

The Impact of AI on Constitutional Rights

Privacy Concerns: The proliferation of AI technologies has led to increased data collection and analysis, often without adequate safeguards. Governments and corporations have unprecedented access to personal data, raising significant concerns about privacy rights.

Case Study: Data Mining in Healthcare: In the healthcare sector, AI systems analyze vast amounts of patient data to improve diagnostic accuracy and treatment outcomes. However, the collection and processing of sensitive health information without informed consent can lead to violations of privacy rights. Ethical considerations surrounding data use must prioritize patient autonomy and confidentiality.

For example, AI-driven healthcare solutions may aggregate patient data from various sources, often without explicit consent. To address these concerns, healthcare organizations must implement strict data protection measures and ensure that patients are fully informed about how their data will be used.

Freedom of Expression and Censorship: The role of AI in content moderation raises critical questions about the extent to which freedom of speech is protected. Algorithms designed to filter harmful content may inadvertently suppress legitimate expression, necessitating clear guidelines to prevent arbitrary censorship.

Case Study: Social Media and Political Speech: In India, instances have emerged where social media platforms disproportionately remove content related to political dissent or criticism of government policies. Such actions can undermine democratic discourse and stifle dissenting voices. Ensuring transparency in content moderation practices and providing avenues for users to appeal wrongful takedowns is vital for maintaining a healthy democratic space.

Moreover, social media platforms should adopt clear content moderation policies that define acceptable content and establish transparent processes for appeals. This approach can help protect users’ rights while addressing harmful content.

Discrimination and Bias: AI systems have the potential to exacerbate existing biases and inequalities. In a diverse nation like India, the risk of AI reinforcing discrimination against marginalized communities is a significant concern.

Case Study: AI in Recruitment: Recruitment algorithms, if not designed carefully, can favor candidates from certain demographics while disadvantaging others. This is particularly concerning in a context where caste and gender discrimination remain prevalent. AI systems must be rigorously tested for bias, and developers should implement measures to ensure fairness in hiring practices. Organizations using AI in recruitment must commit to transparency and regularly audit their systems for bias. Adopting inclusive hiring practices that prioritize a diverse pool of candidates can reduce the likelihood of discrimination. Additionally, creating guidelines that prioritize diversity in recruitment algorithms can further ensure equitable outcomes.
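The kind of bias audit described above can be illustrated with a minimal sketch. It assumes an auditor has a log of shortlisting decisions labelled by demographic group, computes each group's selection rate, and compares it with a reference group. The function names, the sample data, the group labels, and the 0.8 review threshold are all hypothetical choices made for illustration; they are not drawn from Indian law or from any particular recruitment system.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Return the share of shortlisted candidates for each group.

    `outcomes` is an iterable of (group, shortlisted) pairs, where
    `shortlisted` is True if the algorithm advanced the candidate.
    """
    totals = defaultdict(int)
    shortlisted_counts = defaultdict(int)
    for group, shortlisted in outcomes:
        totals[group] += 1
        if shortlisted:
            shortlisted_counts[group] += 1
    return {group: shortlisted_counts[group] / totals[group] for group in totals}


def impact_ratios(rates, reference_group):
    """Divide each group's selection rate by the reference group's rate."""
    reference_rate = rates[reference_group]
    if reference_rate == 0:
        raise ValueError("reference group has no shortlisted candidates")
    return {group: rate / reference_rate for group, rate in rates.items()}


def flag_disparities(ratios, threshold=0.8):
    """List groups whose ratio falls below the assumed review threshold."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical audit sample: 10 candidates per group, invented for illustration.
sample = (
    [("group_a", True)] * 5 + [("group_a", False)] * 5
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(sample)
ratios = impact_ratios(rates, reference_group="group_a")
flagged = flag_disparities(ratios)
print(rates)
print(ratios)
print(flagged)
```

A flagged group in a check of this kind would not by itself establish discrimination; it would only indicate where an independent auditor might look more closely at the training data and the model's decision criteria.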

Regulatory Framework for AI in India

To navigate the complexities of AI and constitutional rights, a comprehensive regulatory framework is essential. This framework should address key areas such as data protection, algorithmic accountability, freedom of speech safeguards, and public awareness.

- Data Protection and Privacy: The Personal Data Protection Bill (PDPB) aims to provide a legal framework for data protection in India. Its effective implementation is crucial for safeguarding individual rights. Key provisions should include:

  - Informed Consent: Organizations must obtain informed consent from individuals for data collection and processing. This enables individuals to make educated decisions regarding their data.

  - Data Minimization: Organizations should only collect data that is necessary for their purposes, minimizing potential risks to individual privacy.

  - Transparency: Organizations must disclose how AI systems operate, particularly regarding decision-making processes. Transparency is essential for accountability and public trust.

- Algorithmic Accountability: Establishing standards for algorithmic accountability is vital to mitigate the risks of bias and discrimination. This can be achieved through:

  - Bias Audits: Conducting regular audits of AI systems can uncover and address biases in algorithms. To maintain objectivity, these audits should be carried out by independent third parties.

  - Transparency Reports: Organizations should be required to publish reports detailing the performance of AI systems, including bias detection and remediation efforts. This fosters accountability and public trust.

- Freedom of Speech Safeguards: To ensure that AI does not infringe on freedom of speech, it is essential to establish:

  - Clear Content Moderation Policies: Guidelines that define acceptable content and provide avenues for appeal against wrongful takedowns are necessary. Users must have the right to challenge decisions made by AI systems.

  - Public Oversight: Involving civil society in monitoring AI-driven content moderation practices can enhance accountability and transparency. Public forums for discussion can help address concerns related to censorship.

- Public Awareness and Education: Enhancing public awareness about AI technologies and their implications is crucial for safeguarding rights. This can be achieved through:

  - Educational Programs: Incorporating AI literacy into educational curricula can empower individuals to understand their rights in the digital age. Schools and universities should include discussions on the ethical implications of AI.

  - Workshops and Campaigns: Conducting workshops to raise awareness about data privacy, algorithmic biases, and the importance of protecting constitutional rights can foster an informed citizenry.

International Perspectives on AI and Rights

Examining international frameworks can provide valuable insights into how other nations are addressing the challenges posed by AI. The European Union’s General Data Protection Regulation (GDPR) sets a high standard for data protection, emphasizing individual rights and accountability.

Key Features of GDPR:

- Right to Access: Individuals have the right to access their personal data held by organizations, promoting transparency and accountability.

- Right to Erasure: The right to be forgotten allows individuals to request the deletion of their data under certain circumstances, empowering them to control their digital footprint.

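- Data Protection Officers: Public authorities, and organizations whose core activities involve large-scale systematic monitoring or large-scale processing of sensitive data, are required to appoint data protection officers to oversee compliance with data protection laws, promoting accountability.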

India could benefit from adopting similar principles while tailoring them to its unique socio-cultural context. By learning from international best practices, India can enhance its regulatory framework for AI and data protection.

Challenges and Recommendations

While the proposed regulatory framework offers a roadmap for addressing the challenges posed by AI, several obstacles must be overcome to ensure its successful implementation.

- Capacity Building: Developing expertise in AI and data protection among regulators, law enforcement, and civil society is crucial. Training programs and workshops should be conducted to build capacity and understanding of AI technologies, their implications, and best practices for regulation.

- Multi-Stakeholder Engagement: Engaging multiple stakeholders, including government agencies, private sector organizations, civil society, and academia, is essential for creating a comprehensive regulatory framework. Collaborative approaches can help address diverse perspectives and ensure that the regulatory framework is effective and inclusive.

- Continuous Monitoring and Evaluation: The rapidly evolving nature of AI necessitates continuous monitoring and evaluation of the regulatory framework. Regular assessments can help identify gaps, challenges, and emerging issues, allowing for timely adjustments to the regulatory landscape.

- Promoting Ethical AI Development: Encouraging the development of ethical AI technologies is crucial for safeguarding rights. Organizations should adopt ethical guidelines for AI development that prioritize fairness, accountability, transparency, and inclusivity. Collaboration between technologists and ethicists can help ensure that AI systems are designed with human rights considerations in mind.

Conclusion

The convergence of artificial intelligence and constitutional rights in India offers both significant opportunities and notable challenges. As AI technologies continue to evolve, ensuring the protection of fundamental rights becomes increasingly critical. The proposed regulatory framework emphasizes the need for accountability, transparency, and public engagement in navigating the complexities posed by AI. By prioritizing individual rights and societal welfare, India can harness the potential of AI while safeguarding the democratic principles enshrined in its Constitution.

A collaborative approach involving government, civil society, and the private sector will be essential in shaping a future where AI serves as a tool for empowerment rather than oppression. In navigating the digital age, it is imperative to recognize that technology should enhance human rights, not introduce new forms of oppression. By fostering an environment where AI development is guided by ethical principles and robust regulatory oversight, India can ensure that its journey into the future upholds the dignity and rights of all its citizens.

References:

- The Constitution of India (1950).

- Justice K.S. Puttaswamy v. Union of India, W.P. (Civil) No. 494 of 2012 (Supreme Court of India).

- Personal Data Protection Bill, 2019, Bill No. 373 of 2019 (India), available at https://www.prsindia.org/billtrack/personal-data-protection-bill-2019.

- Proposal for a Regulation on a European Approach to Artificial Intelligence, COM (2021) 206 final (European Commission), available at https://ec.europa.eu/digital-strategy/our-policies/european-ai-act_en.

- Algorithmic Justice League, The Dangers of AI Bias (2020), available at https://www.ajl.org/dangers-of-ai-bias.

- Ryan Binns, Fairness in Machine Learning: Lessons from Political Philosophy, in Proceedings of the 2018 Conference on Fairness, Accountability, and Transparency 1 (2018), available at https://dl.acm.org/doi/10.1145/3287560.3287598.

- Kate Crawford, Artificial Intelligence’s White Guy Problem, The New York Times (June 26, 2016), available at https://www.nytimes.com/2016/06/26/opinion/sunday/artificial-intelligences-white-guy-problem.html.

- Jack Oberlander & Surya Raghavan, AI and the Right to Privacy in India, Journal of Data Protection & Privacy (2021), available at https://www.henrystewartpublications.com/jdpp.

- Niloofar Shadloo & Aashish Pujari, Algorithmic Accountability and the Indian Legal Framework, Indian Journal of Law and Technology (2021), available at https://ijlt.in/.

- NASSCOM, AI in India: Opportunities and Challenges (2020), available at https://nasscom.in/knowledge-library/ai-india-opportunities-and-challenges.

- Anirudh Sethi, AI and Human Rights: An Indian Perspective, International Journal of Human Rights and Constitutional Studies (2020), available at https://www.inderscienceonline.com/doi/abs/10.1504/IJHRCS.2020.107295.